In today’s fast-paced business world, real-time data is the lifeblood of informed decisions. Whether you are optimizing pricing (82% of retail organizations in the U.S. are data-driven businesses, according to Exasol), fueling sales initiatives (69% of businesses are increasing their investment in personalization, according to Segment), or transforming marketing campaigns (64% of marketers use data for targeted campaigns, according to Forbes), you need accurate, timely data from diverse sources.

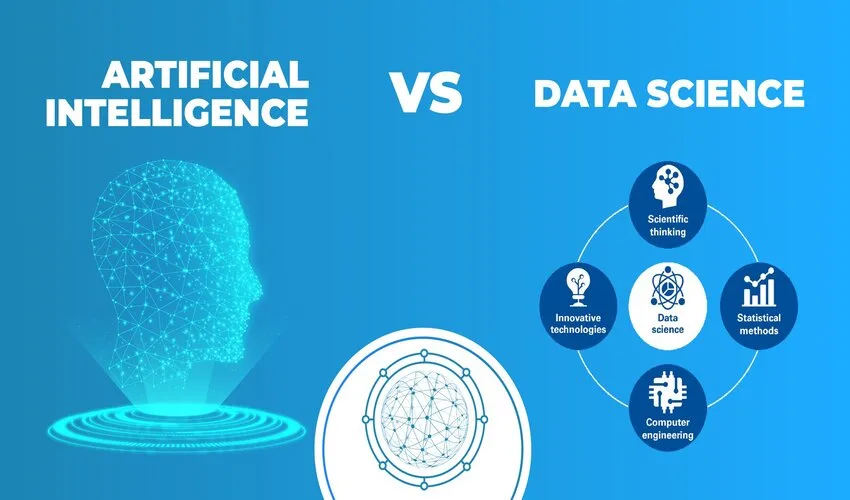

This is where web scraping comes in. The technique automatically extracts data from websites and turns it into structured information you can use to inform your strategies. But with the recent hype surrounding artificial intelligence (AI), a question arises: does AI-powered web scraping actually improve the process, or is manual scraping still the way to go?

Pros of AI-powered web scraping

1. Efficiency and speed

- Automated data extraction

AI-powered scraping automates the retrieval of information from websites, making the workflow faster and more efficient than collecting the same data by hand.

- Handling large volumes of data quickly

AI-driven web scraping processes large datasets at scale, giving you the capacity to gather, analyze, and use extensive volumes of data in real time.
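To make "automated data extraction" concrete, here is a minimal sketch of the rule-based extraction step that scraping tools build on, whether or not AI is involved. It uses only Python's standard-library `html.parser`; the `name` and `price` class names and the sample markup are hypothetical, and a real tool would fetch pages over HTTP rather than parse a hard-coded string.

```python
from html.parser import HTMLParser


class ProductParser(HTMLParser):
    """Collects (name, price) pairs from elements tagged with hypothetical CSS classes."""

    def __init__(self):
        super().__init__()
        self.products = []    # extracted (name, price) records
        self._field = None    # field that the text currently being read belongs to
        self._current = {}    # partially assembled record

    def handle_starttag(self, tag, attrs):
        classes = (dict(attrs).get("class") or "").split()
        if "name" in classes:
            self._field = "name"
        elif "price" in classes:
            self._field = "price"

    def handle_endtag(self, tag):
        self._field = None

    def handle_data(self, data):
        text = data.strip()
        if not self._field or not text:
            return
        self._current[self._field] = text
        # Emit a record once both fields have been seen.
        if "name" in self._current and "price" in self._current:
            price = float(self._current["price"].lstrip("$").replace(",", ""))
            self.products.append((self._current["name"], price))
            self._current = {}


def extract_products(html):
    parser = ProductParser()
    parser.feed(html)
    parser.close()
    return parser.products


SAMPLE = """
<div class="product"><span class="name">Desk Lamp</span><span class="price">$24.99</span></div>
<div class="product"><span class="name">Office Chair</span><span class="price">$149.00</span></div>
"""

print(extract_products(SAMPLE))  # [('Desk Lamp', 24.99), ('Office Chair', 149.0)]
```

An AI-powered tool would typically locate these fields with a trained model instead of hand-written class names, but the output is the same kind of structured record, ready for analysis.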

2. Accuracy and precision

- Reduced errors

By relying on algorithms and machine learning models, AI can deliver a higher level of accuracy, reducing the risk of mistakes, such as typos or skipped records, that creep into manual data extraction.

- Enhanced data quality and reliability

Because algorithms apply the same extraction logic to every page, the output is more consistent and reliable, so you can base decisions on trustworthy information.

3. Scalability

- Ability to scale operations seamlessly

As data requirements grow, AI systems can scale operations without a proportional increase in resources. This lets you adapt to changing needs and handle growing datasets efficiently.

- Handling diverse and dynamic websites

Traditional rule-based scrapers often break when a website’s structure changes, because they rely on fixed selectors tied to the old layout. AI algorithms can adapt instead, recognizing patterns in the page and adjusting their extraction to suit the evolving nature of websites.
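AI tools typically handle layout changes with trained models. A much simpler, non-ML illustration of the same idea is a fallback chain: first try the selectors that worked on earlier versions of a page, then recognize the value by its shape wherever it now appears. The class names, the price pattern, and the sample markup below are assumptions for illustration only.

```python
import re
from html.parser import HTMLParser

# Matches amounts such as "$24.99" or "€19,50"; a simplifying assumption, not a full price grammar.
PRICE_RE = re.compile(r"[$€£]\s?\d+(?:[.,]\d{2})?")
VOID_TAGS = {"br", "hr", "img", "input", "link", "meta"}  # tags that never get a closing tag


class TextByClass(HTMLParser):
    """Maps each CSS class to the text placed directly inside elements carrying it."""

    def __init__(self):
        super().__init__()
        self._stack = []      # class lists of currently open elements
        self.by_class = {}    # class name -> list of text snippets
        self.all_text = []    # every non-empty text snippet, in document order

    def handle_starttag(self, tag, attrs):
        if tag not in VOID_TAGS:
            self._stack.append((dict(attrs).get("class") or "").split())

    def handle_endtag(self, tag):
        if tag not in VOID_TAGS and self._stack:
            self._stack.pop()

    def handle_data(self, data):
        text = data.strip()
        if not text:
            return
        self.all_text.append(text)
        for cls in (self._stack[-1] if self._stack else []):
            self.by_class.setdefault(cls, []).append(text)


def extract_price(html, known_classes=("price", "product-price")):
    """Return (price_text, strategy): known selectors first, then a content pattern."""
    parser = TextByClass()
    parser.feed(html)
    parser.close()
    # 1) Try the selectors that matched earlier versions of the page.
    for cls in known_classes:
        if cls in parser.by_class:
            return parser.by_class[cls][0], f"class:{cls}"
    # 2) Fall back to recognizing the value by its shape, wherever it now sits.
    for text in parser.all_text:
        match = PRICE_RE.search(text)
        if match:
            return match.group(), "pattern"
    return None, "not found"


old_layout = '<span class="price">$24.99</span>'
new_layout = '<div class="amount-v2"><b>Now only $19.50</b></div>'
print(extract_price(old_layout))  # ('$24.99', 'class:price')
print(extract_price(new_layout))  # ('$19.50', 'pattern')
```

Returning the strategy alongside the value makes it easy to log when a page has silently switched from selector matches to pattern matches, which is often the first sign of a redesign.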

4. Enhanced data analysis capabilities

- Integration with analytical tools

Data extracted by AI tools can be fed directly into analytical tools and platforms. This facilitates in-depth analysis, trend identification, and insight generation, empowering businesses to make informed decisions.

- Synergistic machine learning enhancements

AI-powered web scraping can be coupled with machine learning models to uncover patterns, correlations, and predictive insights in the extracted data. Combining the two strengthens the overall analytical value of the scraping process.

How AI-scraped data can benefit different industries

eCommerce and retail

- Real-time price monitoring across platforms

- Optimization of pricing strategies

- Competitor product launch tracking

Finance and investment

- Monitoring market trends and sentiment analysis

- Informed investment decisions

- Forecasting stock prices and market movements

Healthcare

- Extracting information from scientific literature and clinical trials

- Identifying potential drug candidates

- Staying updated on the latest medical advancements

Marketing and advertising

- Social media sentiment analysis

- Monitoring consumer preferences and trends

- Targeted advertising based on insights

Supply chain and logistics

- Supply chain optimization through demand forecasting

- Efficient inventory management

- Monitoring supplier information

Real estate

- Analyzing property listings and market trends

- Informed property investments

- Identifying emerging markets and demand patterns

Travel and hospitality

- Price comparison

- Sentiment analysis on customer reviews

- Enhancing customer experiences based on feedback

Human resources

- Insights into job market trends and competitor hiring practices

- Attracting top talent through informed recruitment strategies

- Adjusting hiring practices based on market demands

Concerns associated with AI-driven web scraping

1. Ethical concerns

- Privacy issues and data misuse

AI-powered web scraping raises ethical concerns about the privacy of individuals whose data is being scraped. Because AI collects data automatically and at scale, it may unintentionally extract personal or sensitive information, which makes strict adherence to data protection regulations and privacy standards essential.

- Adherence to ethical scraping practices

Following ethical scraping practices is crucial to avoid unauthorized access, data breaches, or misuse of information. Organizations and developers are responsible for establishing and following ethical guidelines, such as honoring a site’s terms of use and robots.txt rules, and for respecting the boundaries of data ownership and usage.
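Two habits that put these ethical concerns into practice are checking a site's robots.txt before fetching a page and stripping personal data before anything is stored. The sketch below uses Python's standard `urllib.robotparser` module and a deliberately simple email regex that catches common address formats only; the robots.txt rules, the crawler name, and the URLs are hypothetical.

```python
import re
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content; in practice you would download it from the
# site's /robots.txt (RobotFileParser.set_url() followed by read() does this).
ROBOTS_TXT = """\
User-agent: *
Disallow: /account/
Crawl-delay: 5
"""

# Simple pattern for common email formats; not a full RFC 5322 validator.
EMAIL_RE = re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")


def build_robots_policy(robots_txt):
    """Parse robots.txt rules from a string instead of fetching them."""
    policy = RobotFileParser()
    policy.parse(robots_txt.splitlines())
    return policy


def redact_emails(text):
    """Replace anything that looks like an email address before storing the text."""
    return EMAIL_RE.sub("[REDACTED EMAIL]", text)


policy = build_robots_policy(ROBOTS_TXT)
agent = "ExampleBot"  # hypothetical crawler name
print(policy.can_fetch(agent, "https://shop.example.com/products/lamp"))   # True
print(policy.can_fetch(agent, "https://shop.example.com/account/orders"))  # False
print(policy.crawl_delay(agent))                                            # 5
print(redact_emails("Questions? Email jane.doe@example.com"))  # Questions? Email [REDACTED EMAIL]
```

The `crawl_delay()` value (in seconds) tells a polite crawler how long to wait between requests to the same site.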

2. Initial setup costs

- Investments in AI technology and infrastructure

Adopting AI-powered web scraping involves significant upfront costs for technology and infrastructure, because you need robust systems capable of handling the complexities of web scraping.

- Training and maintenance expenses

Ongoing training and maintenance of AI systems add to the overall cost of AI-powered web scraping. The algorithms need continuous updates and improvements to stay effective.

3. Complex implementation and integration

- Technical expertise required

Implementing AI-based web scraping requires a certain level of technical expertise. Organizations may need skilled professionals who understand web scraping techniques and AI algorithms, adding to the complexity and potential recruitment challenges.

- Integration challenges with existing systems

Integrating AI scraping tools with existing systems can be challenging. Compatibility issues and the need for system modifications may arise, potentially disrupting current workflows and requiring additional investments.

4. Limited contextual understanding

- Challenges in contextual interpretation

AI may struggle with nuanced contextual understanding, especially in areas where human intuition and contextual knowledge play a crucial role. Extracted data might lack the depth of interpretation that a human analyst could provide.

- Difficulty in resolving ambiguities

Ambiguous website content or changes in context may lead to misinterpretations by AI algorithms. Resolving these ambiguities often requires human intervention to ensure accurate and meaningful data extraction.

5. Dependence on data availability

- Vulnerability to website changes

AI models are trained on historical data, and sudden changes in website structures can disrupt their effectiveness. Adapting to frequent changes requires continuous monitoring and adjustments, which may be time-consuming and resource-intensive.

- Reliance on accessible data

AI-based scraping can only work with data it can access. Websites that employ anti-scraping measures, such as CAPTCHAs or IP blocking, can reduce the efficiency of AI tools, forcing workarounds that add cost and can compromise the scraping process.
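The continuous monitoring needed to cope with website changes can start with something as simple as fingerprinting a page's tag structure: routine content updates, such as a new price, leave the fingerprint unchanged, while a redesign changes it and signals that extraction rules or models need attention. This is a minimal sketch assuming static HTML pages; the function names are hypothetical, and pages that render their content with JavaScript would need a browser-based fetch first.

```python
import hashlib
from html.parser import HTMLParser


class StructureParser(HTMLParser):
    """Records opening tags and their classes, ignoring text content."""

    def __init__(self):
        super().__init__()
        self.signature = []

    def handle_starttag(self, tag, attrs):
        classes = " ".join(sorted((dict(attrs).get("class") or "").split()))
        self.signature.append(f"{tag}.{classes}")


def layout_fingerprint(html):
    """Hash the page's tag/class skeleton so that text edits do not change it."""
    parser = StructureParser()
    parser.feed(html)
    parser.close()
    return hashlib.sha256("|".join(parser.signature).encode("utf-8")).hexdigest()


def layout_changed(html, baseline_fingerprint):
    return layout_fingerprint(html) != baseline_fingerprint


baseline = layout_fingerprint('<div class="product"><span class="price">$24.99</span></div>')

# A price change keeps the same skeleton, so no alert is raised.
print(layout_changed('<div class="product"><span class="price">$19.99</span></div>', baseline))  # False
# A redesign renames the elements, so the scraper should be reviewed.
print(layout_changed('<div class="item"><em class="cost">$24.99</em></div>', baseline))  # True
```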

Attaining a balance between AI and human-based scraping

The importance of human involvement

While AI excels at automating routine tasks, human involvement remains crucial for handling nuanced and complex aspects of extraction. Manual scraping allows experts to navigate intricacies that automated algorithms may overlook, ensuring a deeper understanding of the data and its context.

Data extraction companies and professionals with domain knowledge can interpret ambiguous information, adapt to changes, and solve problems that AI alone may struggle with. Combining human insight with AI automation improves the overall quality and reliability of the extraction process.

Customization and flexibility

Customization is key in web scraping, and manual methods offer a level of flexibility that AI algorithms may lack. Human operators can adapt scraping strategies based on the specific requirements of your project, addressing unique challenges and ensuring the extraction of relevant and accurate data.

The most effective approach often involves a harmonious blend of AI and manual scraping. Leveraging AI for large-scale, repetitive tasks and manual expertise for intricate or evolving scenarios can help you achieve optimal results.
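One way to implement this blend is confidence-based routing: the automated extractor handles the records it is sure about, and anything ambiguous goes to a person. The sketch assumes the AI extractor reports a confidence score for each field; the `Extraction` record, the 0.85 threshold, and the URLs are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Extraction:
    url: str
    field: str
    value: str
    confidence: float  # 0.0 to 1.0, as reported by a hypothetical AI extractor


def route(extractions, threshold=0.85):
    """Auto-accept confident results; queue the rest for a human reviewer."""
    accepted, needs_review = [], []
    for item in extractions:
        if item.confidence >= threshold:
            accepted.append(item)
        else:
            needs_review.append(item)
    return accepted, needs_review


batch = [
    Extraction("https://shop.example.com/lamp", "price", "$24.99", 0.97),
    Extraction("https://shop.example.com/chair", "price", "from $149", 0.62),
]
accepted, needs_review = route(batch)
print([e.url for e in accepted])      # ['https://shop.example.com/lamp']
print([e.url for e in needs_review])  # ['https://shop.example.com/chair']
```

The threshold is the main tuning knob: raising it sends more records to reviewers and lets fewer errors through, while lowering it does the opposite.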

Selecting the right approach for the job

The decision between manual and AI scraping should be guided by specific project requirements. Criteria such as data complexity, website structure, and the need for real-time adaptability should be considered. Manual methods may be preferred for tasks requiring a nuanced touch, while AI excels in handling large-scale, repetitive tasks.

Web data extraction service providers play a vital role in promoting a balanced approach. A responsible provider understands the strengths and limitations of both manual and AI methods, guiding you in selecting the most suitable approach for your unique needs. Such partners prioritize ethical practices, legal compliance, and ongoing support for a seamless scraping experience.

Conclusion

The evolution of web scraping, particularly with the integration of AI, marks a transformative phase in data extraction. While AI-driven scraping offers remarkable efficiency, accuracy, and scalability, human insight remains irreplaceable.

Businesses navigating the complexities of data extraction must strike a delicate balance between the strengths of AI automation and the nuanced understanding provided by human expertise. The most effective approach emerges from a harmonious blend of these methods, leveraging AI for repetitive tasks and human expertise for contextual interpretation and adaptability.

As industries continue to harness the power of web data extraction, responsible practices, ethical considerations, and a pragmatic approach to selecting scraping methods will define success. Providers equipped with a comprehensive understanding of AI and manual scraping techniques stand as pivotal allies, guiding businesses toward optimal choices tailored to their unique needs.

In this dynamic landscape of data extraction, the journey towards informed decision-making is an amalgamation of technological prowess, ethical considerations, and human finesse. Striking this delicate balance is the cornerstone of unlocking the true potential of web data extraction for informed strategies and sustainable growth.

Ella Wilson is a Sr. content strategist at SunTec India, a leading IT and business process outsourcing company. With 10+ years of experience, her expertise centers on data services, especially data support, data entry, and data annotation. With a comprehensive understanding of photo editing solutions, she also specializes in creating informative and compelling content on real estate photo editing, HDR photo editing, and photo retouching. As a digital marketing enthusiast, she keeps up with the latest technological advancements to keep her write-ups relevant and engaging.
