High-performance computing systems power everything from weather forecasting to drug discovery. Yet many organizations leave significant performance gains on the table. Teams often spend months on large redesigns when a few targeted changes to their existing infrastructure would fix their biggest slowdowns. The gap between a system that crawls and one that flies frequently comes down to how it’s configured. With that in mind, the seven optimizations below take relatively little effort and can still make a noticeable difference.

Let’s explore the practical changes that can improve HPC performance quickly.

1. Parallel Processing Makes Everything Faster

Modern processors pack multiple cores into every chip. If your code runs on a single thread, only one core works while the others sit idle, wasting computational power you already paid for. Parallel processing fixes this by splitting the workload across all available cores.

Breaking Down Tasks for Maximum Speed

Most scientific applications can be split into smaller chunks. Weather models divide the atmosphere into subdomains that different processors compute at the same time. Molecular dynamics simulations handle particle interactions in parallel. Financial risk calculations evaluate thousands of scenarios simultaneously.

- Start by identifying independent operations in your workflow.

- Loop iterations that don’t depend on previous results make perfect candidates.

- Data processing tasks that analyze separate files can run concurrently.

- Testing parallel execution on one bottleneck proves the concept before wider implementation.
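The sketch below shows the idea on a single loop, assuming a C compiler with OpenMP support (for example, GCC with `-fopenmp`). The per-element function `kernel()` and the wrapper `parallel_map_sum()` are hypothetical placeholders for your own independent work; the pattern to look for is a loop whose iterations do not read each other’s results.

```c
#include <stddef.h>

/* Hypothetical per-element work. Each call reads only its own input,
   so loop iterations do not depend on one another. */
static double kernel(double x)
{
    return x * x + 1.0;
}

/* Applies kernel() to every element, stores the results in out, and
   returns their sum.
   Built with OpenMP (e.g. gcc -fopenmp), the iterations are divided among
   the available cores; each thread accumulates a private partial sum and
   the reduction clause adds the partial sums together at the end.
   Built without OpenMP, the pragma is ignored and the loop runs serially. */
double parallel_map_sum(const double *in, double *out, size_t n)
{
    double total = 0.0;
    /* Signed loop index for compatibility with older OpenMP versions. */
    #pragma omp parallel for reduction(+:total) schedule(static)
    for (long i = 0; i < (long)n; i++) {
        out[i] = kernel(in[i]);
        total += out[i];
    }
    return total;
}
```

With OpenMP enabled, the `OMP_NUM_THREADS` environment variable controls how many threads share the loop. Because threads add their partial sums in a different order than a serial loop, floating-point totals can differ in the last few bits between runs with different thread counts.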

2. Memory Bandwidth Determines Real-World Performance

Your CPU can perform calculations faster than memory can supply data. The result is a bottleneck that slows down the entire process.

CPUs execute billions of operations per second, and memory systems struggle to keep pace. While processors wait for data, they burn cycles doing nothing productive. Optimizing memory access patterns reduces this waste.

Cache-aware programming keeps frequently used data close to the processor. Organizing arrays in memory-friendly layouts reduces fetch times. Prefetching loads data before the processor needs it.

- Align data structures to cache line boundaries.

- Access array elements in sequential order.

- Minimize pointer chasing through linked structures.

- Use structure-of-arrays instead of array-of-structures when loops touch only a few fields.

- Batch similar operations together.

These changes require no new hardware. You simply reorganize how your code accesses existing memory, and the improvement shows up directly as reduced execution time.
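As a minimal illustration of the sequential-access and structure-of-arrays tips, the sketch below contrasts an array-of-structures particle layout with a structure-of-arrays layout and shows row-major matrix traversal. The particle fields and function names are hypothetical; the point is the memory access pattern each loop produces.

```c
#include <stddef.h>

/* Array-of-structures: all fields of one particle sit together, so a loop
   that only needs x still pulls y, z, and mass into cache. */
struct particle_aos {
    double x, y, z, mass;
};

/* Structure-of-arrays: each field is its own contiguous array. */
struct particles_soa {
    double *x, *y, *z, *mass;
    size_t n;
};

/* AoS: consecutive x values are sizeof(struct particle_aos) bytes apart
   (typically 32), so most of each loaded cache line goes unused. */
double sum_x_aos(const struct particle_aos *p, size_t n)
{
    double s = 0.0;
    for (size_t i = 0; i < n; i++)
        s += p[i].x;
    return s;
}

/* SoA: unit-stride reads of x only, friendly to hardware prefetchers
   and to SIMD vectorization. */
double sum_x_soa(const struct particles_soa *p)
{
    double s = 0.0;
    for (size_t i = 0; i < p->n; i++)
        s += p->x[i];
    return s;
}

/* Row-major matrix stored in one flat array: the inner loop walks
   consecutive addresses. Swapping the two loops would jump `cols`
   elements on every access instead. */
double sum_matrix_row_major(const double *a, size_t rows, size_t cols)
{
    double s = 0.0;
    for (size_t r = 0; r < rows; r++)
        for (size_t c = 0; c < cols; c++)
            s += a[r * cols + c];
    return s;
}
```

Both particle loops compute the same sum. With four `double` fields, typically only 8 of every 32 bytes the array-of-structures loop brings into cache are `x` values, while the structure-of-arrays loop uses every byte it loads.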

According to one industry report, the HPC market continues to grow, particularly in IT, and its total size is expected to exceed $49.9 billion by 2027.

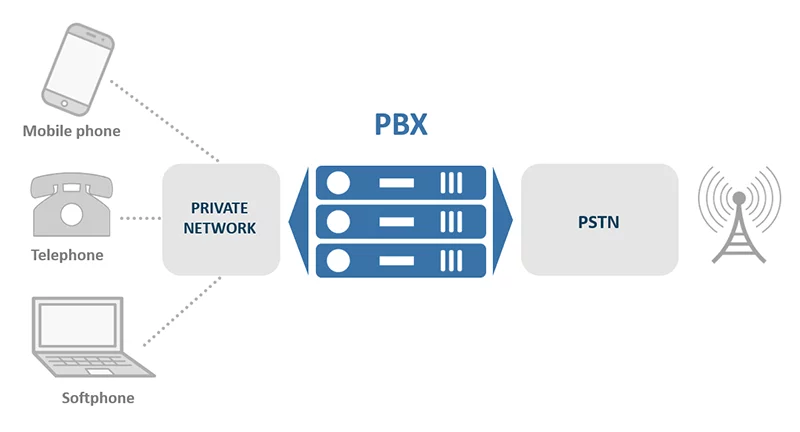

3. Network Topology Optimization Cuts Communication Overhead

Distributed HPC systems spend significant time moving data between nodes. Poor network configuration turns this into a major bottleneck.

Message passing between nodes creates overhead, and every communication event adds latency. Minimizing these exchanges can speed up distributed applications significantly.

Reducing Communication Frequency

Sending data in bulk is usually more efficient than sending many small messages. Every message pays a fixed latency cost, so one large message carrying the same data as 100 small ones is much cheaper to send. Overlapping computation with communication hides the remaining network delay.

Non-blocking communication lets processors continue working while data moves, so they don’t sit idle waiting for transfers to finish. Collective operations such as broadcast and reduce provide tuned implementations of common communication patterns.
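One way to see why batching pays off is the simple latency-bandwidth ("alpha-beta") cost model, in which each message costs a fixed latency alpha plus its size divided by the bandwidth beta. The sketch below is a back-of-the-envelope calculator under that model only; it ignores contention, packet size limits, and protocol changes for large messages, and the numbers in the usage note are assumptions rather than measurements of any real network.

```c
#include <stddef.h>

/* Latency-bandwidth ("alpha-beta") model of one point-to-point message:
     time = alpha + bytes / beta
   alpha: fixed per-message latency in seconds
   beta:  sustained bandwidth in bytes per second */
double message_time(double alpha, double beta, double bytes)
{
    return alpha + bytes / beta;
}

/* Time to send `count` separate messages of `bytes` each. */
double time_many_small(double alpha, double beta, size_t count, double bytes)
{
    return (double)count * message_time(alpha, beta, bytes);
}

/* Time to send the same data packed into a single message.
   The bandwidth term is identical to time_many_small(); only the
   latency is paid once instead of `count` times, so the saving is
   (count - 1) * alpha. */
double time_one_large(double alpha, double beta, size_t count, double bytes)
{
    return message_time(alpha, beta, (double)count * bytes);
}
```

With an assumed 1 µs latency and 10 GB/s bandwidth, 100 separate 1,000-byte messages take about 110 µs under this model, while one 100,000-byte message carrying the same data takes about 11 µs.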

4. Compiler Optimization Flags Unlock Hidden Performance

Default compiler settings favor fast compilation and easy debugging over runtime speed; GCC, for example, compiles at -O0 unless told otherwise. Enabling optimization flags can produce faster executables without any source changes.

Compilers apply hundreds of transformations to your source code. Loop unrolling reduces loop-control overhead. Function inlining eliminates call costs. Vectorization processes multiple data elements with a single SIMD instruction.

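Level 2 optimization (-O2) provides substantial gains with minimal risk. Level 3 (-O3) enables more aggressive optimizations that usually, but not always, run faster, so benchmark both. Profile-guided optimization (PGO) uses data from representative runs to tailor executables to actual usage patterns.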

- Enable auto-vectorization for SIMD instructions.

- Use link-time optimization for cross-file improvements.

- Target specific CPU architectures with -march flags.

- Enable fast-math options (such as GCC’s -ffast-math) only when relaxed floating-point precision is acceptable.

- Remove debug symbols from production builds to shrink binaries (debug information rarely slows execution on its own).
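The following sketch is a loop written so that an optimizing compiler has a good chance of auto-vectorizing it: contiguous arrays, no dependence between iterations, and `restrict` pointers that promise the arrays do not overlap. The file and program names in the comment (`saxpy.c`, `main.c`, `app`, `typical_input`) are hypothetical, and the GCC invocations are starting points to benchmark rather than a recommended configuration.

```c
#include <stddef.h>

/* y[i] = a * x[i] + y[i] for every i.
   Written to be easy to auto-vectorize:
   - unit-stride access to contiguous arrays,
   - no iteration reads a value written by another iteration,
   - `restrict` tells the compiler x and y do not overlap, so it can
     skip runtime aliasing checks.

   Example GCC builds (benchmark each rather than assuming a winner):
     gcc -O2 -c saxpy.c                    baseline optimized build
     gcc -O3 -march=native -c saxpy.c      aggressive opts + SIMD for the
                                           build machine's CPU (binary may
                                           not run on CPUs lacking its
                                           instruction-set extensions)
     gcc -O3 -fopt-info-vec -c saxpy.c     report which loops vectorized
     gcc -O2 -flto saxpy.c main.c -o app   link-time optimization
   Profile-guided optimization, in two builds:
     gcc -O2 -fprofile-generate saxpy.c main.c -o app
     ./app typical_input                   run on representative data
     gcc -O2 -fprofile-use saxpy.c main.c -o app */
void saxpy(size_t n, float a, const float *restrict x, float *restrict y)
{
    for (size_t i = 0; i < n; i++)
        y[i] = a * x[i] + y[i];
}
```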

5. I/O Buffering Prevents Storage From Slowing You Down

Reading and writing data one byte at a time cripples performance. Buffered I/O batches operations and cuts the number of system calls.

Storage devices work best with large sequential transfers. Small random accesses waste bandwidth. Buffering accumulates data before writing and reads ahead during input.

- Use memory-mapped files for random access patterns.

- Implement double buffering to overlap I/O with computation.

- Choose appropriate stripe sizes for parallel file systems.

- Aggregate small writes into larger operations.

- Disable unnecessary metadata updates, such as file access-time updates (the noatime mount option).

Moving temporary files from network storage to local SSDs can deliver immediate improvements, because applications spend less time waiting for disk operations.
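C’s stdio already buffers streams internally (and `setvbuf` can enlarge that buffer), but an explicit aggregation layer makes the write size visible and tunable. The sketch below is a hypothetical `write_agg` helper that collects small records in memory and passes them to `fwrite` in large chunks; the 1 MiB capacity is an assumption to tune for your file system, not a recommended value.

```c
#include <stdio.h>
#include <string.h>
#include <stddef.h>

/* Illustrative capacity; on a parallel file system a multiple of the
   stripe size is a common starting point. */
#define AGG_CAPACITY (1u << 20)

/* Hypothetical write aggregator: many small appends, few large writes. */
struct write_agg {
    FILE *fp;
    size_t used;
    unsigned char buf[AGG_CAPACITY];
};

/* Hands everything accumulated so far to fwrite in one call.
   Returns 0 on success, -1 on a short write. */
int agg_flush(struct write_agg *a)
{
    if (a->used > 0 && fwrite(a->buf, 1, a->used, a->fp) != a->used)
        return -1;
    a->used = 0;
    return 0;
}

/* Appends one record, flushing only when it would not fit. Records larger
   than the whole buffer are written straight through after a flush, so
   output order is preserved. Returns 0 on success, -1 on failure. */
int agg_write(struct write_agg *a, const void *data, size_t len)
{
    if (len > AGG_CAPACITY - a->used) {
        if (agg_flush(a) != 0)
            return -1;
        if (len > AGG_CAPACITY)
            return fwrite(data, 1, len, a->fp) == len ? 0 : -1;
    }
    memcpy(a->buf + a->used, data, len);
    a->used += len;
    return 0;
}
```

Call `agg_flush()` before closing the file so the last partial chunk is not lost. The struct embeds a 1 MiB array, so allocate it statically or on the heap rather than on a small stack.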

6. Workload Scheduling Maximizes Resource Utilization

Running jobs in arbitrary order wastes resources and extends completion times. Smart scheduling keeps hardware busy and reduces queuing delays.

Priority settings ensure critical work runs first. Resource reservations guarantee availability for time-sensitive jobs. Fair-share policies prevent single users from monopolizing systems.

Understanding your workload patterns helps optimize scheduling. Short jobs benefit from dedicated queues. Long-running simulations need checkpoint-restart capabilities. Interactive work requires responsive allocation.
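Checkpoint-restart is what lets a long simulation survive scheduler time limits and preemption. The sketch below assumes a POSIX system and a hypothetical `sim_state` struct standing in for real simulation data: it saves state every few steps through a temporary file plus `rename`, and on start-up resumes from the last checkpoint if one exists. The function names are illustrative, not from any library.

```c
#include <stdio.h>

/* Hypothetical simulation state. A real application would also save its
   field arrays, RNG state, and anything else needed to resume exactly. */
struct sim_state {
    long step;
    double value;
};

/* Writes the state to "<path>.tmp", then renames it over `path`. On POSIX
   systems rename() replaces the target atomically, so a job killed
   mid-write never leaves a truncated checkpoint behind.
   Returns 0 on success, -1 on failure. */
int save_checkpoint(const char *path, const struct sim_state *s)
{
    char tmp[4096];
    int len = snprintf(tmp, sizeof tmp, "%s.tmp", path);
    if (len < 0 || (size_t)len >= sizeof tmp)
        return -1;
    FILE *fp = fopen(tmp, "wb");
    if (fp == NULL)
        return -1;
    size_t written = fwrite(s, sizeof *s, 1, fp);
    if (fclose(fp) != 0 || written != 1)
        return -1;
    return rename(tmp, path) == 0 ? 0 : -1;
}

/* Returns 1 if a checkpoint was restored, 0 if none could be opened
   (treated as a fresh start), -1 if the file was incomplete. */
int load_checkpoint(const char *path, struct sim_state *s)
{
    FILE *fp = fopen(path, "rb");
    if (fp == NULL)
        return 0;
    size_t got = fread(s, sizeof *s, 1, fp);
    fclose(fp);
    return got == 1 ? 1 : -1;
}

/* Advances the simulation to total_steps, saving every `interval` steps
   (interval must be > 0), so a job stopped at its time limit can be
   resubmitted and continue from the last checkpoint instead of step 0. */
int run_with_checkpoints(const char *path, long total_steps, long interval,
                         struct sim_state *out)
{
    struct sim_state s = {0, 0.0};
    if (load_checkpoint(path, &s) < 0)
        return -1;
    while (s.step < total_steps) {
        s.value += 1.0;          /* placeholder for one step of real work */
        s.step++;
        if (s.step % interval == 0 && save_checkpoint(path, &s) != 0)
            return -1;
    }
    *out = s;
    return 0;
}
```

Dumping a raw struct is only safe when the same binary on the same architecture reads it back; portable codes often use a self-describing format such as HDF5 instead.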

7. Profiling Identifies Your Actual Bottlenecks

Guessing which code sections need optimization wastes effort. Profilers reveal where programs actually spend time.

Your intuition about performance hotspots is often wrong. Profiling tools measure execution time objectively and show which functions consume the most cycles.

- Sample-based profiling adds minimal overhead.

- Instrumentation profiling gives exact call counts and timings, at the cost of higher overhead.

- Hardware counters reveal cache misses and branch mispredictions.

- Flame graphs visualize call stacks and time distribution.

- Line-level profiling pinpoints specific hotspots.

Running a profiler takes minutes. The data guides optimization efforts toward changes that actually matter. You stop wasting time on code sections that contribute little to the overall runtime.
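Sampling profilers such as Linux `perf` collect this information without code changes; when you want to confirm a specific suspicion, lightweight manual instrumentation can complement them. The sketch below is a hypothetical `region_timer` helper (the names are illustrative, not from any library) that accumulates wall-clock time and call counts per code region using POSIX `clock_gettime` with `CLOCK_MONOTONIC`, so it assumes a POSIX system.

```c
#ifndef _POSIX_C_SOURCE
#define _POSIX_C_SOURCE 199309L   /* exposes clock_gettime on strict C builds */
#endif
#include <stdio.h>
#include <time.h>

/* Accumulated timing for one named region of code. */
struct region_timer {
    const char *name;
    double total_s;
    long calls;
    struct timespec start;
};

/* Seconds elapsed between two monotonic timestamps. */
static double elapsed_s(const struct timespec *a, const struct timespec *b)
{
    return (double)(b->tv_sec - a->tv_sec)
         + (double)(b->tv_nsec - a->tv_nsec) * 1e-9;
}

void timer_start(struct region_timer *t)
{
    clock_gettime(CLOCK_MONOTONIC, &t->start);
}

/* Adds the time since the matching timer_start() to the region's total. */
void timer_stop(struct region_timer *t)
{
    struct timespec now;
    clock_gettime(CLOCK_MONOTONIC, &now);
    t->total_s += elapsed_s(&t->start, &now);
    t->calls++;
}

/* Prints the region's share of a total run time measured elsewhere. */
void timer_report(const struct region_timer *t, double program_total_s)
{
    double pct = program_total_s > 0.0 ? 100.0 * t->total_s / program_total_s
                                       : 0.0;
    printf("%-12s %8ld calls %10.6f s (%5.1f%% of run)\n",
           t->name, t->calls, t->total_s, pct);
}
```

Wrap a suspected hotspot in `timer_start()`/`timer_stop()` and compare its share of the run against what the profiler reported; regions that account for a few percent are rarely worth optimizing first.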

Conclusion

These seven optimizations can deliver measurable improvements without requiring new hardware. You can implement several of them this week and see results quickly.

Start with profiling to identify your biggest bottlenecks. Apply parallel processing to the most time-consuming sections. Enable compiler optimizations and rebuild your applications. Each change compounds with others to create substantial cumulative gains.

Your HPC infrastructure already contains untapped potential. These practical strategies unlock performance today. Stop waiting for budget approval on new hardware and start optimizing what you already have.